Exploring Shape vs Texture Bias in Visual Transformers

Figure from Geirhos et al. 2018

Advisors: Andreas Kist & Bernhard Egger

For the course Computational Visual Perception

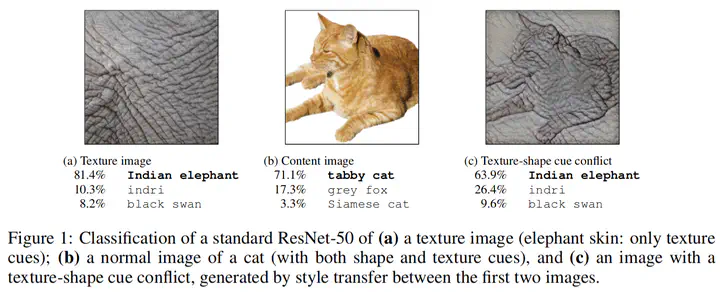

Geirhos et al. (2018) showed that Convolutional Neural Networks (CNNs) trained on ImageNet are biased towards texture, whereas humans are biased towards shape, on an image classification task. They further showed that training the same CNN on Stylized-ImageNet increases the model’s shape bias. We extend this work by showing that shape bias increases further for Vision Transformer models (DeiT), which helps narrow the gap to human performance on shape-related tasks (e.g., the shape-texture cue-conflict test set).
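To make "shape bias" concrete, the sketch below computes it as it is commonly defined for cue-conflict images: among the trials where the prediction matches either the shape category or the texture category, the fraction that match the shape. The function name `shape_bias` and the `(predicted, shape_label, texture_label)` record layout are assumptions made for illustration, not the code used in this project.

```python
import math
from typing import Iterable, Tuple

# Hypothetical record layout: (predicted, shape_label, texture_label)
Record = Tuple[str, str, str]


def shape_bias(records: Iterable[Record]) -> float:
    """Fraction of shape decisions among all shape-or-texture decisions."""
    shape_hits = 0
    texture_hits = 0
    for predicted, shape_label, texture_label in records:
        if shape_label == texture_label:
            # Not a cue-conflict image: shape and texture agree, so skip it.
            continue
        if predicted == shape_label:
            shape_hits += 1
        elif predicted == texture_label:
            texture_hits += 1
        # Predictions matching neither cue are ignored.
    decided = shape_hits + texture_hits
    if decided == 0:
        return math.nan  # undefined when no prediction matched either cue
    return shape_hits / decided


if __name__ == "__main__":
    # Toy data: a cat-shaped image rendered with elephant texture.
    toy = [
        ("cat", "cat", "elephant"),       # shape decision
        ("elephant", "cat", "elephant"),  # texture decision
        ("dog", "cat", "elephant"),       # neither cue, ignored
        ("cat", "cat", "elephant"),       # shape decision
    ]
    # 2 shape decisions and 1 texture decision give 2 / 3.
    print(shape_bias(toy))
```

A value near 1 indicates mostly shape-based decisions and a value near 0 mostly texture-based ones. In practice the predictions would come from mapping a model's ImageNet outputs onto the coarse categories used by the cue-conflict set, a step this sketch leaves out.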